Ever since generative AI (genAI) entered the financial and business zeitgeist, concerns have run rampant about the technology’s potential to hallucinate. In simple terms, a hallucination occurs when a genAI model provides inaccurate or misleading information, usually with total confidence and conviction. And while genAI tools can employ various guardrails against hallucination, the majority of tools on the market today do not take adequate measures to prevent it. Moreover, because generative AI is only as accurate and smart as the data it’s trained on, genAI tools trained on public or unvetted data are even more likely to generate false or misleading information.

While hallucination has limited consequences for the average individual using genAI for quick answers to internet queries, it can be catastrophic for organizations using generative AI for market or investment research. In recent years, a growing number of companies and financial firms have been exploring the benefits of genAI tools, such as faster time to insight, easier discovery of buried information, and time saved on repetitive, manual tasks. However, many companies have run into significant issues with these tools, particularly when using a model that is trained on public web data or that lacks adequate guardrails against misinformation.

That’s why, when selecting a genAI tool for their business and investment needs, firms must ensure that the tool has procedures in place to combat hallucination. Ideally, the tool should also be trained to understand financial and business language, as well as the nuances of the firm’s research process, so that it can truly support and accelerate, rather than hinder, the firm’s workflow.

Below, we cover why combating genAI hallucination is critical for businesses and financial institutions, as well as the steps for doing so. Finally, we discuss the AlphaSense genAI model and how it effectively prevents hallucinations and ensures that you get the highest-quality answers to your research queries.

Risks of GenAI Hallucination

For enterprise organizations and investors, the risks of genAI hallucination cannot be overstated.

Picture this: you are a professional conducting market research and decide to ask your genAI tool for specific market insights relevant to your research area. A tool prone to hallucination can confidently provide you with completely false or misleading information, and if you don’t confirm the answer’s accuracy, you run the risk of making a poor strategic decision or missing a key opportunity. This could have catastrophic long-term implications.

For an investor, hallucinations can produce erroneous analyses of a company’s financials, such as incorrect revenue projections, distorted valuations, or fabricated stock price trends. Acting on such false information can lead to misguided allocations, expose your portfolio to unnecessary risk, and violate due diligence protocols.

Relying on hallucinated data can also result in reputational damage if the unvetted information appears in publicly disclosed or client-facing presentations or reports, eroding trust between your company and its stakeholders or clients. Furthermore, using incorrect AI-generated information in filings, financial reports, or investment recommendations can lead to regulatory penalties, compliance violations, or lawsuits, particularly in heavily regulated industries like finance.

GenAI hallucinations can also reinforce bias when inaccurate or skewed data is used to make generalized assumptions. This can lead to distorted market research or misguided investment evaluations and ultimately hurt business outcomes.

Finally, relying on a genAI model that hallucinates will eventually put you at a disadvantage relative to competitors who use more accurate tools. Over time, this can cost you market share and your competitive edge.

To mitigate all these risks, it’s important to select a generative AI tool that follows specific steps to combat hallucination and ensure the utmost accuracy in the information it generates.

Ways to Mitigate GenAI Hallucination

Ensure Your GenAI Model is Trained on High-Quality Data

One of the top ways to mitigate genAI hallucination is ensuring that the model is trained not just on publicly available web data, but on premium, business-grade data. The internet is full of inaccurate information, and many generative AI models can’t separate the truth from misinformation when they draw answers from the web. That’s why relying on genAI tools trained on public data puts organizations at high risk of inaccurate insights, even when the generated information sounds compelling.

When we compare consumer-grade genAI tools, such as ChatGPT or Perplexity, with purpose-built market intelligence genAI tools like AlphaSense, we see a stark contrast in the quality of the data each tool draws on. Consumer-grade tools are trained on public web data, which means they can reproduce false information found on the web. A tool like AlphaSense, however, is trained to think like an analyst and pulls premium financial and business data straight from our platform, including company documents, premium news sources, broker research, and expert interviews. This automatically provides a high degree of protection against false or misleading information.

Incorporate Citations or Other Verification Features

All generative AI models are capable of hallucination, but the ability to track the generated answer straight back to the source enables you to instantly verify the information and get additional context if needed.

Many genAI models lack citations or verification for their answers altogether, which should be a strong deterrent for enterprise organizations or investors who need to be able to verify their information. Some tools do provide citations, but they only cite the full documents from which they sourced the answer, which means you will need to dig through pages and pages of content to find the context you are looking for.

AlphaSense provides citations to the exact snippets of text from which an answer is sourced, so that you can instantly get smart on the topic at hand and ensure that the answer came from a valid and reliable source.
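To make snippet-level verification concrete, here is a minimal Python sketch of the kind of check it enables: given an answer’s citations, flag any whose quoted snippet cannot be found in the cited document (ignoring case and whitespace). This is not AlphaSense’s implementation; `Citation`, `verify_citations`, and the in-memory `corpus` dictionary are hypothetical names chosen for illustration.

```python
import re
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Citation:
    """Hypothetical record linking part of an answer to its supporting text."""
    doc_id: str   # identifier of the cited source document
    snippet: str  # exact text the answer claims to be based on


def _normalize(text: str) -> str:
    # Collapse whitespace and lowercase so line breaks or casing in the
    # source do not cause false mismatches.
    return re.sub(r"\s+", " ", text).strip().lower()


def verify_citations(citations: List[Citation], corpus: Dict[str, str]) -> List[Citation]:
    """Return every citation whose snippet does not appear in the cited document
    (ignoring case and whitespace), or whose document is missing entirely."""
    unverified = []
    for c in citations:
        source = corpus.get(c.doc_id)
        if source is None or _normalize(c.snippet) not in _normalize(source):
            unverified.append(c)
    return unverified
```

An empty result means every cited snippet was found in its source; anything returned is a claim a researcher should check by hand before relying on it. Citing only whole documents would make this check far weaker, because a snippet-level citation pins each claim to a specific passage.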

Input Structured and Specific Queries

Sometimes, the easiest way to prevent hallucination in a genAI model is simply being as specific and prescriptive with your query as possible. Hallucination can sometimes be an effect of the model misunderstanding your question, so it’s important to be clear in your prompts.

When you give the model specific parameters, like time frames, industries, or particular data points, it reduces the likelihood of the AI straying into unsupported or fabricated information.

The more ambiguous a query is, the more likely the model is to generate hallucinated responses to fill gaps in understanding. By inputting structured queries, you narrow down the scope of what the model is supposed to address, minimizing the chances of misinformation. Clear, detailed questions make it easier for the AI to stay on track with factual information.
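As a rough illustration of what “structured” can mean in practice, the Python sketch below turns a research question plus explicit parameters (companies, industry, time frame, data points) into a prompt, and adds an instruction that gives the model an alternative to guessing. The `ResearchQuery` class and its field names are hypothetical; they are not part of any AlphaSense API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ResearchQuery:
    """Hypothetical container for the parameters of a research question."""
    question: str
    companies: List[str] = field(default_factory=list)
    industry: Optional[str] = None
    start: Optional[str] = None  # e.g. "2022-Q1"
    end: Optional[str] = None    # e.g. "2023-Q4"
    data_points: List[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render only the parameters that were supplied, one per line."""
        lines = [f"Question: {self.question}"]
        if self.companies:
            lines.append("Companies: " + ", ".join(self.companies))
        if self.industry:
            lines.append(f"Industry: {self.industry}")
        if self.start or self.end:
            lines.append(
                f"Time frame: {self.start or 'earliest available'} "
                f"to {self.end or 'latest available'}"
            )
        if self.data_points:
            lines.append("Data points: " + ", ".join(self.data_points))
        # Give the model an explicit alternative to filling gaps with guesses.
        lines.append("If the sources do not cover this scope, say so instead of guessing.")
        return "\n".join(lines)
```

For example, `ResearchQuery(question="How did gross margin change?", companies=["Acme Corp"], start="2022-Q1", end="2023-Q4", data_points=["gross margin"]).to_prompt()` yields a prompt scoped to one company, one metric, and two years, whereas a bare “Tell me about the market” leaves the model to fill in all of those gaps itself.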

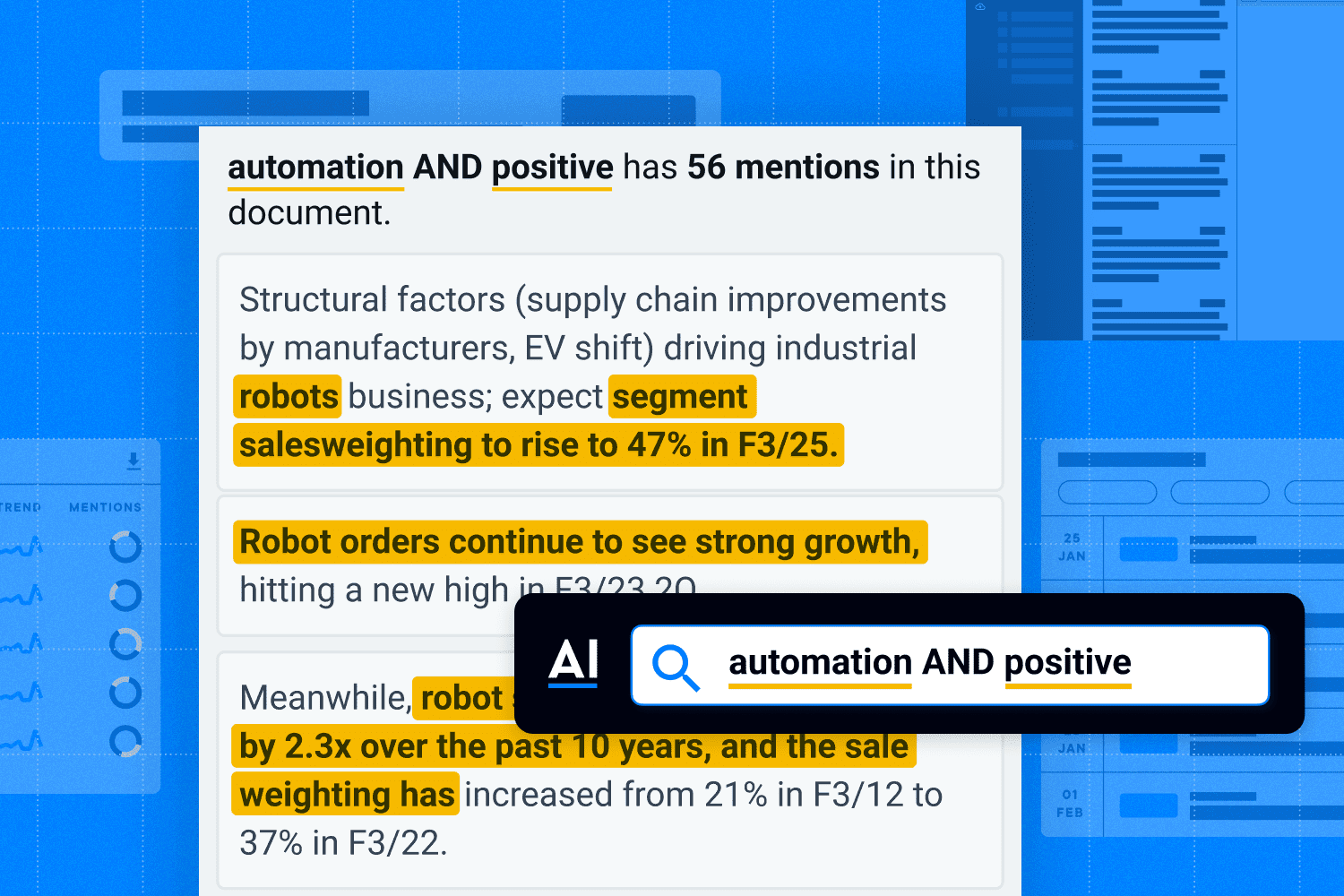

AlphaSense makes this easy with our Smart Synonym™ feature that understands the intent behind your keyword search and eliminates the need to search for specific long-form phrases or to search for multiple iterations of your keyword.

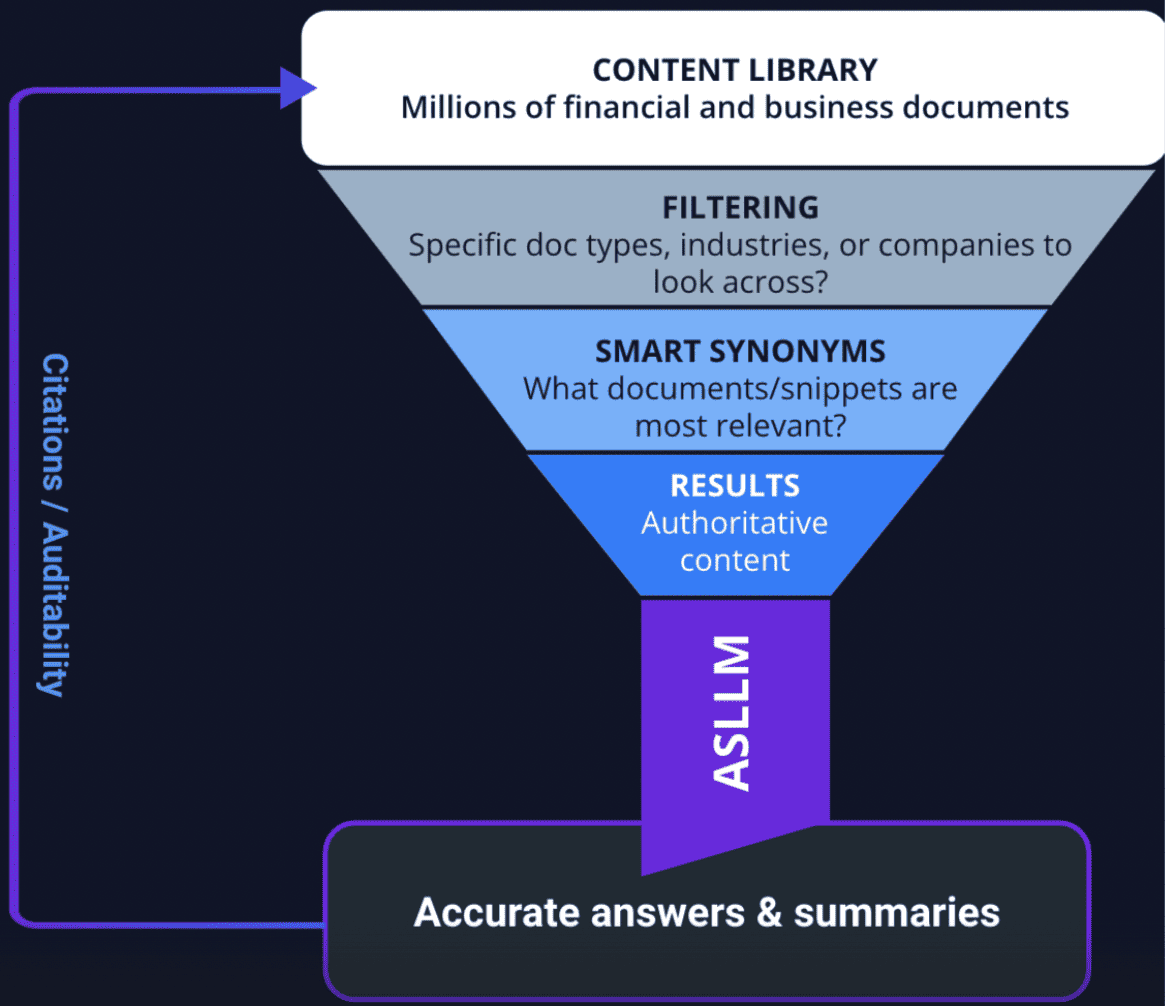

Prioritize a Model That Integrates RAG

Retrieval-augmented generation (RAG) grounds a large language model in authoritative content: at query time, the system retrieves relevant passages from a specified dataset and instructs the model to answer from those passages, rather than relying only on what it absorbed during training. For enterprise organizations or investment firms, RAG is a methodical way to tailor whatever genAI tool they are using to trusted content without retraining the underlying LLM. Not only does it ensure that answers come from the most relevant data sources for the research at hand, but when paired with a model fine-tuned on domain tasks, it can also help the tool reason like an analyst or corporate professional would.
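The retrieve-then-ground pattern can be sketched in a few lines of Python. This is a minimal sketch, not AlphaSense’s implementation: the term-overlap retriever is a deliberately simple stand-in for the vector (embedding) search a production system would typically use, and `retrieve`, `build_grounded_prompt`, and the sample documents are hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple


def _tokens(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, corpus: Dict[str, str], k: int = 2) -> List[Tuple[str, str]]:
    """Return up to k (doc_id, passage) pairs from the specified corpus, ranked by
    term overlap with the query (a stand-in for embedding search)."""
    q = Counter(_tokens(query))
    scored = []
    for doc_id, passage in corpus.items():
        p = Counter(_tokens(passage))
        score = sum(min(count, p[term]) for term, count in q.items())
        if score > 0:  # skip passages that share no terms with the query
            scored.append((score, doc_id, passage))
    scored.sort(key=lambda s: (-s[0], s[1]))  # highest score first, ties by doc_id
    return [(doc_id, passage) for _, doc_id, passage in scored[:k]]


def build_grounded_prompt(query: str, passages: List[Tuple[str, str]]) -> str:
    """Place the retrieved passages in the prompt and restrict the model to them."""
    sources = "\n".join(f"[{doc_id}] {passage}" for doc_id, passage in passages)
    return (
        "Answer using only the sources below and cite the source ID for each claim. "
        "If the sources do not contain the answer, reply 'Not found in sources.'\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


corpus = {  # hypothetical sample documents
    "broker-note": "Analysts expect Acme revenue to grow 8% next year.",
    "earnings-call": "Acme management guided revenue growth of 6 to 8 percent.",
    "news": "A competitor opened a new factory in Ohio.",
}
query = "What revenue growth does Acme expect?"
prompt = build_grounded_prompt(query, retrieve(query, corpus))
```

The resulting `prompt` would then be sent to whichever LLM the tool uses (that call is omitted here). The irrelevant news item never reaches the model, and because each passage carries an ID, the answer can cite the exact source it came from.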

For example, AlphaSense uses a RAG approach that grounds its LLM in our extensive library of premium business and financial content, including equity research from leading Wall Street brokers. Furthermore, the model is trained on specific tasks that our customers perform daily, such as earnings analysis, SWOT analysis, competitive landscaping, and more.

How AlphaSense Avoids GenAI Hallucination

AlphaSense is a secure, end-to-end market intelligence platform that enables business and financial professionals to centralize their proprietary internal content alongside an out-of-the-box collection of 300M+ premium external documents. By layering cutting-edge AI and generative AI features over this content, AlphaSense enhances and accelerates market research, resulting in increased team productivity and collaboration.

Our industry-leading suite of generative AI tools is purpose-built to deliver business-grade insights, leaning on 10+ years of AI tech development. This includes our RAG approach and our proprietary AlphaSense Large Language Model (ASLLM)—trained specifically on business and financial data.

Our genAI suite of tools currently includes:

- Smart Summaries – This feature allows you to glean instant earnings insights (reducing time spent on research during earnings season), quickly capture company outlook, and generate an expert-approved SWOT analysis straight from former competitors, partners, and employees. All Summaries provide citations to the exact snippets of text from which the content is sourced, combining high accuracy with easy verification. We apply this same technology to our collection of expert calls.

- Generative AI chat – Our generative AI chat experience transforms how users extract insights from hundreds of millions of premium documents. Our chatbot is trained to think like an analyst, so it understands the market research intent behind your natural language queries. Whenever you search for information, generative AI is there to summarize key company events, emerging topics, and industry-wide viewpoints. You can dig deeper into topics by asking follow-up questions or choosing a suggested query.

Here’s how AlphaSense stands apart from its competitors and prevents genAI hallucination:

Purpose-Built to Deliver Business-Grade Insights

AlphaSense has spent over a decade building out its AI tech stack and creating AI tools that are uniquely positioned to radically reduce research time and manual effort for knowledge workers. AlphaSense’s original collection of AI tools includes:

- Smart Synonyms – An intelligent search feature that captures language variations by sourcing all applicable synonyms and applying them only in the correct contexts based on the intent of your search.

- Theme Extraction – A method of extracting and ranking the most important trending topics and themes affecting companies and industries. AlphaSense processes millions of documents in real time to alert you to what’s happening.

- Sentiment Analysis – A feature that enables users to identify, quantify, and analyze levels of emotion in human language. AlphaSense performs sentiment analysis at the phrase level to enable sentiment search and aggregates those scores at the document and company level to enable macro trend analysis.

- Company Recognition – A home-grown solution for recognition, inter-company disambiguation, and salience classification of company mentions across AlphaSense content.

- Relevancy Scoring – A multi-factor model that takes into account semantics, source, document structure, recency, and entity aboutness to deliver the most relevant documents to the top of the results list.
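To show what a multi-factor relevancy model can look like, here is a minimal Python sketch that combines the five factors named above into one weighted score. It is an illustration under stated assumptions, not AlphaSense’s model: the `DocSignals` fields, the weights, and the 90-day recency half-life are all hypothetical, and each signal is assumed to be pre-computed and scaled to 0..1.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DocSignals:
    """Hypothetical per-document signals, each pre-scaled to 0..1 (except age)."""
    doc_id: str
    semantic: float   # similarity between the query and the document text
    source: float     # prior for the source type, e.g. a filing vs. an unvetted blog
    structure: float  # where the match occurs, e.g. a heading vs. a footnote
    aboutness: float  # how central the queried entity is to the document
    age_days: float   # time since publication


# Hypothetical hand-set weights (they sum to 1.0); a real system might learn them instead.
WEIGHTS: Dict[str, float] = {
    "semantic": 0.40, "source": 0.15, "structure": 0.10, "recency": 0.15, "aboutness": 0.20,
}
HALF_LIFE_DAYS = 90.0  # hypothetical: the recency score halves every 90 days


def recency(age_days: float) -> float:
    """Exponential decay: 1.0 for a brand-new document, 0.5 after one half-life."""
    return 0.5 ** (age_days / HALF_LIFE_DAYS)


def relevancy(d: DocSignals) -> float:
    """Weighted sum of the individual factors."""
    return (
        WEIGHTS["semantic"] * d.semantic
        + WEIGHTS["source"] * d.source
        + WEIGHTS["structure"] * d.structure
        + WEIGHTS["recency"] * recency(d.age_days)
        + WEIGHTS["aboutness"] * d.aboutness
    )


def rank(docs: List[DocSignals]) -> List[DocSignals]:
    """Most relevant documents first."""
    return sorted(docs, key=relevancy, reverse=True)
```

A weighted sum keeps each factor’s influence easy to inspect: two documents that match a query equally well are separated by recency, while a fresh but off-topic document still ranks below both because its semantic and aboutness scores are low.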

Having this foundation of AI tools, purpose-built for financial and business insights, has enabled us to create generative AI that is specifically designed to enhance and accelerate knowledge workers’ workflows.

Verifiable Down to the Exact Snippet

Unlike most other generative AI tools, which offer little insight into where their answers come from, AlphaSense links to the exact snippets of text within documents that drive summarized insights, allowing users to instantly validate any generated information and gain trust in the model.

Our Smart Summaries are generated only from relevant text snippets in high-quality documents within the platform, meaning that each insight can be verified. Clearly labeled citations create total transparency, and users can quickly “check the work” of the AI summaries and gain additional context for their research.

High-Quality, Premium Content

Language models rely entirely on high-quality content to generate good information. While most genAI models on the market today pull data from the public web—containing information that is often biased, inaccurate, or untrustworthy—generative AI tools within AlphaSense parse exclusive and coveted content sources from our proprietary content universe.

Our library of content includes high-value, premium sources such as broker research reports and expert interview transcripts, as well as company documents, regulatory filings, and government publications. Since our genAI pulls from these premium sources when generating output, you can be sure that every summary is high-quality, credible, and business-grade.

Furthermore, our generative AI leverages our Expert Insights library, which is proprietary to AlphaSense and provides you with differentiated insights you won’t find anywhere else.

Finally, our Enterprise Intelligence solution enables you to securely sync your organization’s internal content into AlphaSense, so that our genAI model can produce insights that are uniquely tailored to your company and industry.

Safe and Responsible AI

Our platform leverages end-to-end encryption of customer information, a zero-trust security model, flexible deployments, and robust entitlement awareness. Our secure cloud environment complies with global security standards, including SOC 2 and ISO 27001, and undergoes regular, accredited third-party penetration testing. Additionally, we offer FIPS 140-2 standard encryption on all content, as well as SAML 2.0 integration to support user authentication.

Smart Summaries has been built with the most security- and privacy-conscious companies in mind. All queries and data fed into and generated by Smart Summaries remain within the platform, and no third parties are involved, so sensitive data is never shared outside the platform. And because our model is trained on premium financial and business data, companies can be confident that the risk of bias, inaccuracy, or misinformation is greatly reduced when using our platform.

Generative AI You Can Trust For Your High-Value Research

In today’s age of fast-moving markets and information overload, a genAI market research solution is integral for organizations to stay efficient, competitive, and confident in their research. But genAI tools are not all created equal, and it’s important to select a tool that does not put your organization or your data at risk.

AlphaSense is your one-stop solution for premium business and financial content—which includes everything you need for holistic market research—and the generative AI technology to help you maximize the value you get from the data. Our platform goes above and beyond to ensure that your data is safe and secure, and that you reap all the benefits of generative AI technology with minimal risk.

Discover the power of genAI-fueled market research, and see how AlphaSense can help you conduct research with unparalleled confidence and speed. Start your free trial today.

Check out this case study to learn how ODDO BHF, one of the largest private banks in Germany, used AlphaSense and its genAI capabilities to streamline insights and get the competitive edge.
